The return of functional programming

• •Takes 6 minutes to read But first …

What is functional programming ?

Functional programming is a programming paradigm in which most computation is treated as evaluation of functions. It emphasizes on expression evaluation instead of command execution. – Wikipedia

When did it all start ?

In the 90’s, there was a war between declarative programming and imperative programming. Declarative programming then represented by logic programming languages like Prolog and early functional languages like Erlang. And imperative languages were represented by procedural languages like C and object-oriented languages like Perl. These used abstract data types and procedures (sequence of commands) to compute.

You can infer, no doubt that imperative languages won, given the present state of computer languages. One big reason for this is that the process of writing code in an imperative fashion closely mimics the way programs are executed inside the computer. The theoretical basis of the modern computer being the von Neumann computer.

Image by Kapooht - Own work, CC BY-SA 3.0, Link

As you can see, the von Neumann model is closely mimicked by the execution flow in imperative languages. There is a strong correspondence between mutable variables and memory cells, variable dereferences and load instructions, variable assignments and store instructions, control structures and jumps. This sort of intuition helped programmers adopt them faster.

What changed?

It’s time to remember a legend. Gordon Moore. He observed that the number of components on a dense integrated circuit doubles every year. Moore’s Law

Image by Wgsimon - Own work, CC BY-SA 3.0, Link

Over the past few years, the trend has subsided as controlling the current flow in the thin channel is becoming more difficult. We cannot make chips smaller anymore, unless there are revolutionary advancements in technology. To keep up with the growing demand for computer power, we’ll have to add more cores on a chip or use more chips. Huge volume workloads that require horizontal scaling are becoming more common. So we’ll have to write software that better gels with the hardware we have.

There are two facets to this problem

- Parallel programming

- Concurrent programming

Parallel programming means using multiple units of hardware to compute something and speed up the process. But the program itself doesn’t require this, it can still be solved on a single unit of hardware without any loss of functionality apart from speed.

Concurrent programming refers to programs that are inherently concurrent - multiple processes executing at the same time, that need to process large number of requests. Eg. Twitter. This has to be done in real-time and cannot be done in a sequential way.

While using conventional / imperative programming to implement either of these, we face some fundamental problems.

The root of the problem

let’s look at some pseudocode now

var x = 0

async { x = x + 1 }

async { x = x * 2 }

// can give 0, 1, 2The above program has two asynchronous operations running. We can see that the output is non-deterministic. This non-determinism is caused by concurrent threads accessing shared mutable state.

non-determinism = parallel processing + mutable state

Out of these, parallel processing can’t be avoided as our processors aren’t growing anytime soon. Hence we have to avoid mutable state.

The Solution

Here come in functional programming and pure functions.

When is a function pure?

- It returns the same result if given the same arguments (it is also referred to as deterministic)

- It does not cause any observable side effects

So functions that use global objects for example would be impure functions.

In functional programming, the programmer would no longer have to worry about manually iterating values or accessing the elements of a data structure. All those would be hidden away in a declarative expression. This is so helpful that the imperative languages have now picked up some style in their syntax from this.

Example: two ways of iterating in Java. The second line is the modern form of expression

for ( int i = 0 ; i < list.size ( ) ; ++i ) { doSomethingWith ( list.get ( i ) ) ; }

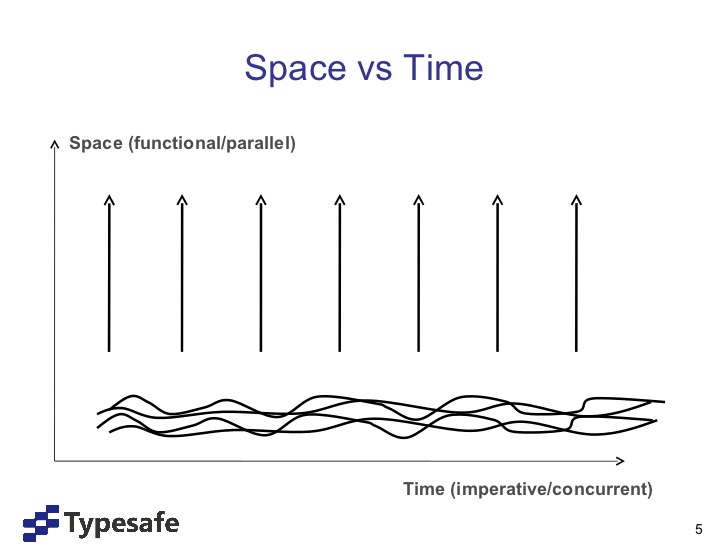

for ( SomeType s : list ) { doSomethingWith ( s ) ; }With programs made mostly of pure functions, there’ll be less mutable state to deal with. We can now think in terms of function compositions. Making one function out of others, this is sort of like thinking in terms of space, whereas imperative programming is thinking in terms of time. You don’t have to worry about concurrent accesses in the functional example, as for each request, it can be served by it’s own space, without worrying about affecting others. No need to worry about race conditions, deadlocks etc.

Example : Scala

A simple example in Scala of how easy it is to parallelize stuff in modern functional languages with built-in functionality for parallelism. This code separates a list of people into minors and adults.

val people: Array[Person]

val (minors, adults) = people partition (_.age < 18)Now the same code parallelized

val people: Array[Person]

val (minors, adults) = people.par partition (_.age < 18)That’s it. No worrying about writing the parallelization code, locks, software transactional memory YADA YADA

The Functional Renaissance

This fundamental compatibility in building concurrent programs has brought back functional programming into the limelight. Now we also have languages that play to the strengths of both the paradigms (Scala). The brevity achieved while writing programs in the recursive way also helps.

Done! 🎉

Additional resources

- Martin Odersky, “Working Hard to Keep It Simple” - OSCON Java 2011

- John Backus’s Turing Award Lecture

John Backus’s Turing Award Lecture was a watershed for the programming-language community because the inventor of FORTRAN, which was the dominant programming language of the day, stepped forward and said that the main stream of programming practice was flowing in a most unproductive direction. His lecture “Can Programming Be Liberated From the von Neumann Style?”

- lambda-the-ultimate Good blog on programming language design